Social reasoning is a core part of human intelligence.

Indeed, despite having smaller brains, Homo sapiens outcompeted Neanderthals through superior social intelligence.

Yet if we want LLMs to be more socially intelligent than humans, today's models fall short in significant ways.

For example, LLMs have weaker theory of mind, are less emotionally intelligent, and are worse negotiators than humans.

This is because they are trained mostly on static data.

Existing RL environments mostly focus on computer use, coding agents, or robot training, and most are built by hand by humans.

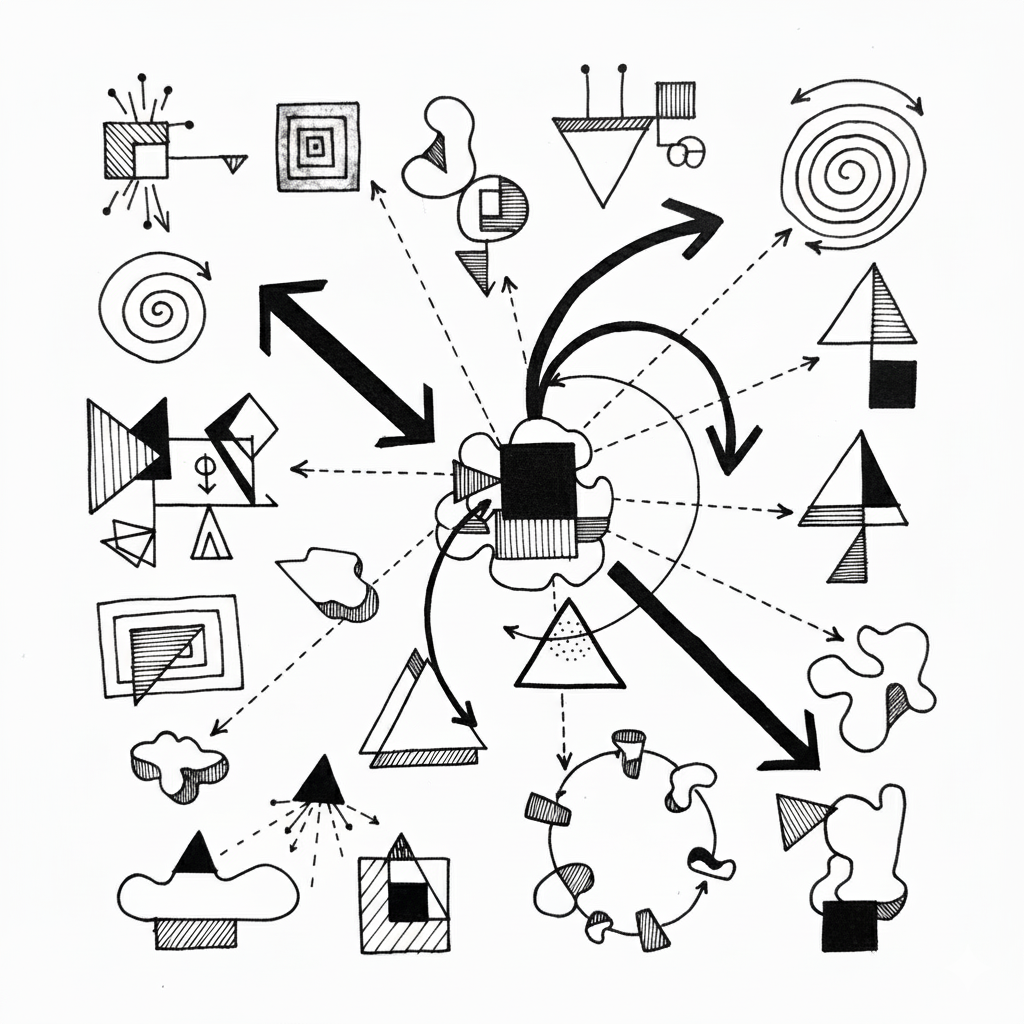

We are building one million dynamic social environments to improve LLMs' social reasoning.

We are creating these environments using a novel unsupervised generation method, meaning that our approach will scale with compute.

Our big bet is that we'll see emergent social reasoning capabilities in agents.

We're already seeing sparks of this in our initial RL runs.

…

Eventually, we want to go beyond social reasoning and build whole simulated worlds, training agents that provide insight into our own world.

Nathan Cloos: nacloos@mit.edu

Joshua Levy: joshua_levy@hks.harvard.edu